By David Gastelum from Arizona State University at Tempe, Arizona, United States

Abstract

Construction industry performance (schedule, budget, and customer satisfaction) has not improved over the last 20 years. This investigation proposes that academic/industry research using actual project data may have more impact on industry performance than traditional survey-based research. The authors use the CIB and CIB W117 platforms to disseminate academic/industry test results and increase their impact on the construction industry. The authors propose to publish in the existing W117 journal and then share the journal papers on an online platform (ResearchGate.net), ensuring faster dissemination of key academic/industry test results to the academic research community. Publishing academic/industry test results in this way will have more impact on industry practices than traditional publication systems, which concentrate on literature reviews and on surveys that collect and analyze industry opinions. Disseminating industry research results will create transparency in the construction industry and the academic research community.

Keywords: Academic research, Industry testing, Performance.

Introduction

For the last 20 years, the construction industry has not been successful in its attempts to improve its performance (Egan, 1998; Lee et al., 1999; Horman & Kenley, 2005; Egbu et al., 2008). Research has shown that few academic research units conduct industry tests following the scientific method (Kashiwagi et al., 2008; Strategic Direction, 2005). In 1994, Sir Michael Latham conducted a study that exposed construction non-performance in the United Kingdom (Latham, 1994). The results of this study prompted members of construction academia and the construction industry to attempt to integrate the two (Kashiwagi et al., 2008).

Despite these attempts, academic researchers in construction were unable to increase the performance of the industry. In 1997, the United Kingdom commissioned John Egan to form a task force and study the performance of the industry three years after the Latham report. Egan's study also identified a lack of leadership in integrating academic research and industry practices as a cause of continued low performance (Egan, 1998).

Although Latham and Egan sought to change the way construction academics remain separated from the construction industry and from actual tests, the industry has seen minimal improvement (Chikuni & Hendrik, 2012; Oyele et al., 2012; Gregory et al., 2005; Bernstein, 2003).

Studies conducted in the United States have reported the following results:

- Over 90% of projects in transportation construction are over budget (Lepatner, 2007).

- Close to 50% of time is used inefficiently on job sites (Lepatner, 2007).

- Projects are over budget an average of 27% (Kashiwagi, 2013).

- 25% to 50% of resources are wasted on a project (Lepatner, 2007).

- Management inefficiency costs owners between $15.6B and $36B per year.

- $4B to $12B is spent trying to resolve disputes and claims (Lepatner, 2007; PWC, 2009).

- Major infrastructure projects typically overrun costs by 50%, with some overruns ranging from 255% to as high as 36,000% (Lepatner, 2007).

- In 2015, a study by the Construction Industry Institute (CII) found that only 2.5% of construction projects worldwide were successful (on time, on budget, with high customer satisfaction) (CII, 2015).

International construction management research has existed since the mid-1980s, but in that time it has not been effective in increasing construction performance. One possible cause is that industry and academia do not integrate with each other (they operate in silos). Another is that the academic research community has not identified the problem, or that the industry is setting the direction of academic research without knowing what the solution is.

Problem

Construction management research has continued to advance over the last several decades, but performance in the industry is still in decline. Figure 1 shows the growth in construction management publications since 1938.

Figure 1: Percentage of publications related to Construction Management since 1938 (21,311 publications analyzed in total).

Despite the increased interest in academic publications in the construction management field, the performance of the industry has not improved. The problem may be that efforts made through academic research are not yielding measurable improvements to industry performance.

Traditional Research Methods

In 2011, Graham et al. suggested that the majority of research conducted in the construction field is disconnected from the actual needs of the industry. They concluded that "a review of literature show that, historically, research has not played a major role in the advancement of the construction industry" (Graham et al., 2011). In the United States, the National Academy of Sciences has stated that the nation's research agenda as a whole does not cover the entire industry and is unable to identify the research areas that would improve the performance of the construction industry (NAS, 2009).

In 2006, Task Group 61 (TG61), commissioned by the International Council for Research and Innovation in Building and Construction (CIB), sought to identify academic research units that had improved the delivery of construction services. TG61 looked for research supported by project-based performance metrics, as opposed to literature research and survey data. TG61 conducted a literature search spanning 15 million articles, 4,500 of which were reviewed directly. Only 16 of these articles described methods that claimed to increase performance through research testing, and together they covered 3 methods:

- Performance Assessment Scoring System (PASS).

- City of Fort Worth Equipment Services Department (ESD-FT).

- Performance Information Procurement System / Performance Information Risk Management System (PIPS/PIRMS).

The authors found that PASS and ESD-FT were not supported by project-based performance metrics that validate their claims. These studies are strictly qualitative and do not provide evidence-based methodologies that can be replicated or tested (Kashiwagi et al., 2009; PBSRG, 2014). Although PASS and ESD-FT claim to improve project performance, neither is supported by project-based research, so neither fits within the TG61 criteria.

A Non-Traditional Approach

The third method TG61 evaluated was PIPS/PIRMS, used by the Performance Based Studies Research Group (PBSRG). PIPS/PIRMS is the only research methodology examined in the TG61 study that is supported by project-based performance metrics (Kashiwagi, 2014). CIB TG61 therefore concluded that PBSRG is the only academic unit capable of improving the performance of the construction industry. Founded in 1993, PBSRG reports the following historical research performance metrics (Rivera, 2013; Kashiwagi, 2014; PBSRG, 2014):

- 1,900+ projects and services delivered.

- $6.8B of projects and services delivered.

- 98% customer satisfaction.

- $17.3M in research funding generated.

- Decreased the cost of services by 31% (average).

- Decreased efforts by the client by up to 79%.

- Identified the highest performing expert at lowest cost 57% of the time.

PBSRG attributes its success to its ability to recognize and address industry issues directly, because its researchers focus on validating academic publications through project-based testing. The TG61 study suggests that this methodology is unlike anything else currently done in academia, making it a very "non-traditional" approach. This finding led CIB to elevate TG61 to a working commission, W117 "Performance Measurement in Construction," and to partner with PBSRG to disseminate academic/industry results and motivate other researchers to do the same (Kashiwagi et al., 2009; PBSRG, 2014).

Resistance to New Approaches

Despite the effectiveness of the research efforts at PBSRG, other academic units refused to adopt this non-traditional model. In 2005, before its partnership with CIB, PBSRG presented its methodology to the National Science Foundation (NSF), a U.S. agency that awards research grants to higher education institutions. The NSF denied PBSRG funding and advised it not to resubmit because its methods were "poorly constructed" and "not relevant." According to the PBSRG director, this was a common viewpoint at the time. Many high-impact journals were not interested in PBSRG research because it was too unique and did not fit within traditional academic parameters.

As a result of this widespread resistance, PBSRG research was not adequately distributed to other researchers for effective peer evaluation and examination. For example, by 2014 Xu Jun, a graduate student from China, had spent over 6 years researching the needs of the construction industry in China. Jun proposed "guanxi" as one of the main reasons foreign methods and systems needed to be modified before being implemented (Jun, 2014). Guanxi is a form of business relationship deeply rooted in Asian culture. Dr. Dean Kashiwagi, one of the examiners of Xu Jun's presentation, identified similarities between guanxi and "bakshish," a form of business relationship found in the Kingdom of Saudi Arabia. Dr. Kashiwagi and Xu Jun made the following observations:

- Guanxi and bakshish are based on business relationships rather than performance.

- PIPS/PIRMS research had identified relationships as inefficient and as one of the causes of low performance (Kashiwagi, 2014).

- PIPS/PIRMS had directly addressed business relationships in over 6 countries over the previous 20 years (Kashiwagi, 2014).

- Xu Jun was not aware of bakshish (that is, that guanxi was not unique) or of the PIPS/PIRMS results.

Uniting Academia and the Industry

Although the efforts at PBSRG have not been widely accepted by the wider academic community, several key stakeholders have recognized the effectiveness of its research. Harvard University funded 6 construction tests that used PIPS/PIRMS. All 6 projects were completed with the following results:

- Delivered at lower cost.

- Minimal administrative costs/time (compared to traditional project delivery methods).

- Higher performance rating (compared to traditional project delivery methods).

PBSRG and Harvard University received the CoreNet Global Innovation of the Year Award for the results of these tests (Sullivan et al., 2007). The CoreNet Global Innovation of the Year Award is an industry recognition given to groups that achieve high performance in otherwise low-performing areas.

The research done by TG61 and the story of PBSRG suggest that the larger academic community may be disconnected from the immediate needs of the industry. Most construction management publications are not based on project-based performance metrics, and PBSRG research has often been disregarded as irrelevant. The underlying problem is that academia and industry are not working in a cohesive and efficient manner that can lead to long-term improvement of overall project performance. The authors propose that this disconnection results from the following:

- Most current research is based on survey data, not on project performance information.

- Research is commonly published one to two years after the data is collected, which delays industry implementation and further project-based testing.

Proposal

This research proposes that, to unite academic research more closely with the needs of the construction industry, non-traditional methods of research and publication should be further developed. The authors suggest that this objective can be achieved, in part, by creating a journal that is, first, supported by performance-based research and, second, easily accessible to both researchers and industry professionals. Such a journal can bypass traditional publication systems, which are often delayed by journal requirements and lengthy peer-review processes. The proposed implementation is as follows:

- Create a journal that concentrates on academic research/industry test results to improve industry performance.

- Identify a method to increase the transparency in research work that identifies “accurate” and “inaccurate” concepts by using research tests with metrics (instead of industry opinions).

- Identify a way to document and proliferate the test information (database, journal publication and secondary publication system).

- Identify the impact of the performance information by documenting the movement of the paradigm into more cultures, countries and other industries.

Methodology

The proposal will be validated (or refuted) through the following steps:

- Request that CIB create a platform (journal and website) where performance-based research can be shared. Publishing academic/industry test results in this journal will minimize inaccurate ideas in academic research.

- Ensure that test results are published in the journal at least twice per year from 2015 to 2017.

- Identify academic or industry experts to serve as peer reviewers (the list to be compiled before the first publication in 2015).

- Create a database of test results to share with experts and researchers. This will serve as a secondary publication system that will greatly increase exposure to the academic/industry test information.

- Integrate the performance information of research tests into real-world projects to increase the number of research/industry tests.

To test the effectiveness of this research method, the authors will set a two-year evaluation period (2015 to 2017). During that period, the effectiveness of the new publication will be measured by tracking the following performance metrics:

- Journal issues published twice per year, or at least an improvement over the baseline year (2014).

- Expert peer reviewers available (at least 2 per publication being considered).

- Number of reads of work shared on secondary publication system.

- Number of citations gained through sharing test results on the secondary publication system.

Results

All research test results reported below correspond directly to a step in the methodology, were obtained from 2015 to the start of 2017, and use 2014 as the baseline for previous performance unless otherwise specified.

Transparency through a Journal Publication

The academic unit conducting PIPS/PIRMS research found publishing papers in non-W117 journals more difficult and time-consuming, and therefore shifted its primary focus to W117 as its sole tool for disseminating research tests. In 2014, only 1 issue was published, containing 6 research papers; both the number of issues and the number of papers published had been declining steadily since their peak in 2012. A new publishing system, capable of reviewing, editing, and publishing 11 papers in 2 weeks, was implemented at the end of 2015 (W117, 2016). Publication data for the following years, including the expected publications in 2017, are shown in Table 1.

Table 1: W117 journal issues and papers published per year, with 2014 as baseline.

| Year | Issues | Papers |
|---|---|---|
| 2014 (baseline) | 1 | 6 |
| 2015 | 1 | 11 |
| 2016 | 2 | 15 |
| 2017 (expected) | 2 | 14 |

In Table 1, 2014 is used as the baseline because it is the year before the research began. One goal of the research was to stabilize the number of issues published per year at a minimum of 2. Because the research was approved late in 2015, only 1 issue could be published that year. However, that issue contained almost twice as many papers as the baseline year (11 versus 6), and it was published within 2 weeks of the submission deadline.
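The baseline comparison behind Table 1 is simple arithmetic. The Python sketch below reproduces it, assuming the Table 1 values as input and using two checks taken from the methodology: at least 2 issues per year, and improvement over the 2014 baseline. The function name `compare_to_baseline` and the dictionary layout are hypothetical, chosen for illustration; they are not part of the W117 publishing process.

```python
# Table 1 values for the W117 journal; the 2017 row holds the expected counts reported in the text.
TABLE_1 = {
    2014: {"issues": 1, "papers": 6},   # baseline year
    2015: {"issues": 1, "papers": 11},
    2016: {"issues": 2, "papers": 15},
    2017: {"issues": 2, "papers": 14},  # expected
}
BASELINE_YEAR = 2014
ISSUE_TARGET = 2  # methodology goal: at least 2 issues per year (hypothetical constant name)


def compare_to_baseline(table, baseline_year=BASELINE_YEAR, issue_target=ISSUE_TARGET):
    """Return, for each year, papers relative to the baseline and whether each goal was met."""
    base_papers = table[baseline_year]["papers"]
    report = {}
    for year, row in sorted(table.items()):
        report[year] = {
            # Ratio of papers in this year to papers in the baseline year, rounded to 2 decimals.
            "paper_ratio": round(row["papers"] / base_papers, 2),
            # True when the year reached the minimum number of issues.
            "issue_target_met": row["issues"] >= issue_target,
            # True when the year published more papers than the baseline year.
            "improved_on_baseline": row["papers"] > base_papers,
        }
    return report


if __name__ == "__main__":
    for year, result in compare_to_baseline(TABLE_1).items():
        print(year, result)
```

With these values, 2015 reaches about 1.83 times the baseline paper count while missing the two-issue goal, and 2016 and 2017 meet both checks.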

A review of every article W117 has published in the Journal for the Advancement of Performance Information and Value (JAPIV) found that over 80% were case studies or data directly related to academic/industry testing. As a result, JAPIV is now a CIB-recommended journal (W117, 2016). CIB also recognized W117 as the highest-performing working commission at its annual World Conference, citing its innovative approach to disseminating academic/industry information as one of the main reasons for its success (CIB, 2016).

Wim Bakens, director of CIB, tasked the W117 directors with creating a roadmap that other working commissions could replicate to increase the performance and impact of their research. He also assigned Dr. Kashiwagi (director of W117) to present at the World Building Congress and to give seminars to the coordinators of other working commissions.

Experts as Reviewers

The new publishing system uses industry experts and researchers who have already seen the impact that academic testing in industry can have on project performance. As of May 2017, 45 CIB members serve as reviewers for the W117 journal: 31% are high-performing industry experts in the field and 69% are researchers from like-minded universities. Their organizations include:

- Arizona State University

- Central Building Research Institute, India

- City of Rochester, Minnesota, USA

- Construction Research Institute of Malaysia

- Danish Building Research Institute, Denmark

- Ministry of Municipal Affairs, Saudi Arabia

- ON Semiconductor, worldwide

- RMIT University, Australia

- Scenter, Europe

- The Bartlett Faculty of the Built Environment, United Kingdom

- Universidade Federal do Rio de Janeiro, Brazil

- University of Alberta, Canada

- University of Kansas, USA

- University of South Africa

- University of Zagreb, Croatia

- VTT Technical Research Centre of Finland

- Western Illinois University, USA

Experts who are aware of the importance of industry testing can quickly distinguish accurate from inaccurate proposals, which speeds up the review process.

Secondary Publication System

At the start of 2016, the main researcher identified ResearchGate.net as a platform where research/industry test results could be shared in addition to the W117 website. Before this, W117 had only its website for disseminating research results, and there was no noticeable transfer of information to researchers outside CIB except through direct contact with the primary researchers of a given publication.

ResearchGate.net is an online platform designed to make it easier to share research being performed around the world. As of the start of 2017, ResearchGate had more than 12 million registered researchers (about 60% of potential users), reported higher user activity than Academia.edu (another research-sharing platform), and offered easier sharing than Google Scholar (an online "platform" composed of databases). The New York Times has called ResearchGate "a mash-up of Facebook, Twitter and LinkedIn" (Scott, 2017). The work of W117 editors and other PIPS/PIRMS researchers was added to the site at the start of 2016.

The results of adding these documents were as follows (January 2016 to May 2017):

- 76 full-text articles shared.

- 4,985 reads.

- 86 followers.

- 944 unique researchers reached.

- 252 additional citations (mentions of W117 research work in non-W117 publications).

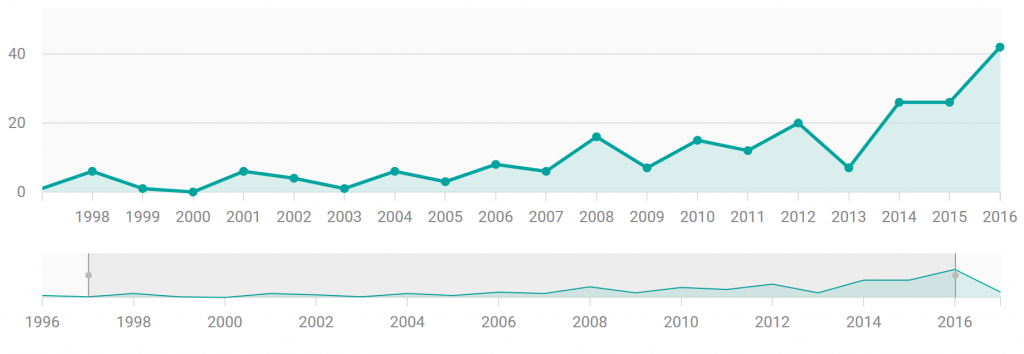

More importantly, the increased exposure, together with the more prominent case studies reported recently, has led to an exponential increase in citations of the work, as seen in Figure 2.

Integrating Performance Information in Proliferation Systems to Increase Test Counts

As of May 2017, 2 years into the implementation of the new publishing system, W117 and JAPIV have seen the following results:

- Procurement organizations and major government organizations in the Netherlands have identified the Best Value Approach (BVA) as the mainstream and most recommended approach to delivering construction and other services.

- Norway has awarded its first project (a 350M Euro infrastructure improvement) and has 10 ongoing projects. W117 will document all tests.

- W117 experts are educating and assisting Polish professionals in running their first tests.

- The State of Utah will resume academic/industry testing after 16 years.

- JSS engineering university in Mysore, India (rated among the top 50 engineering universities) is importing the entire W117 research/education program and plans to establish a BVA Master's degree to support academic/industry research.

- Education and testing are under way in China and Vietnam.

- Saudi Arabia is implementing the BVA and information technology to classify all contractors and continuously track their project performance.

- Education programs for high schools are flourishing in the Phoenix metropolitan area and online.

- The CIB board has requested the W117 approach as a template for other working commissions that are having difficulty sustaining themselves and impacting industry.

Conclusion

The volume of research in construction management has increased exponentially over the years. Despite this, the performance of the construction industry has not improved for the last 30 years. In 2006, CIB tasked TG61 with identifying systems that used performance-based research tests to improve the performance of the construction industry. Literature research, together with performance data gathered by TG61 and now W117, shows that academic/industry research testing of "real" cases has more impact on the performance of the construction industry than traditional academic research, which relies on survey results as its main source of information. CIB, W117, and ResearchGate.net are proving to be the most efficient ways to disseminate this information. These 3 platforms enable a faster flow of test results, making this approach more effective than traditional publication systems in changing industry practices. It is the integration of research/industry tests and results with journal publications and a secondary online platform that is now having an impact on the construction industry and its practices.

References

| [1] | CIB World (2014). International Council for Research and Innovation in Building and Construction. Available from: <http://www.cibworld.nl/site/home/index.html>. Accessed October 1, 2015. |

| [2] | Adrian, J. J. (2001). "Improving Construction Productivity." Construction Productivity Newsletter, vol. 12, no. 6. |

| [3] | CII (2014). Construction Industry Institute. Available from: <https://www.construction-institute.org/scriptcontent/index.cfm>. Accessed March 3, 2017. |

| [4] | Egan, S. J. (1998). "Rethinking Construction: The Report of the Construction Task Force to the Deputy Prime Minister, John Prescott, on the scope for improving the quality and efficiency of UK construction." The Department of Trade and Industry, London. |

| [5] | Egbu, C., Carey, B., Sullivan, K., & Kashiwagi, D. (2008). Identification of the Use and Impact of Performance Information Within the Construction Industry. Report, The International Council for Research and Innovation in Building and Construction. |

| [6] | Jun, X. (2014). Procurement System Selection in the Chinese Building Industry: A New Model. SKEMA Business School. Dissertation, France. |

| [7] | Kashiwagi, D. (2014). The Best Value Standard. Performance Based Studies Research Group, Tempe, AZ. Publisher: KSM Inc., 201. |

| [8] | Kashiwagi, D., and Kashiwagi, J. (2013) “Dutch Best Value Effort.” RICS COBRA Conference 2013, New Delhi, India, pp. 349-356 (September 10-12, 2013). |

| [9] | Kashiwagi, D., Sullivan, K. and Badger, W. (2008) “Case Study of a New Construction Research Model.” ASC International Proceedings of the 44th Annual Conference, Auburn University, Auburn, AL, USA, CD-4:2 (April 2, 2008). |

| [10] | Kashiwagi, J., Kashiwagi, D. T., and Sullivan, K. (2009) “Graduate Education Research Model of the Future.” 2nd Construction Industry Research Achievement International Conference, Kuala Lumpur, Malaysia, CD-Day 2, Session E-4 (November 3-5, 2009). |

| [11] | Lee, S-H., Diekmann, J., Songer, A. & Brown, H. (1999). "Identifying waste: Applications of construction process analysis." Proceedings of the 9th IGLC Conference. Berkeley, USA. |

| [12] | Lepatner, B.B. (2007), Broken Buildings, Busted Budgets. The University of Chicago Press, Chicago. |

| [13] | PBSRG. (2017). Performance Based Studies Research Group Internal Research Documentation, Arizona State University, Unpublished Raw Data. |

| [14] | PricewaterhouseCoopers (PwC). (2009). “Need to know: Delivering capital project value in the downturn.” Retrieved from https://www.pwc.com/co/es/energia-mineria-y-servicios-publicos/assets/need-to-know-eum-capital-projects.pdf. Accessed April 6, 2017. |

| [15] | Rivera, A., Le, N., Kashiwagi, J., Kashiwagi, D. (2016). Identifying the Global Performance of the Construction Industry. Journal for the Advancement of Performance Information and Value, 8(2), 7-19. |

| [16] | Scott, M. (2007). A Facebook-Style Shift in How Science Is Shared. The New York Times. Accessed May 17, 2017. |

| [17] | Sullivan, K., Kashiwagi, J., Sullivan, M., Kashiwagi, D. (2007). Leadership Logic Replaces Technical Knowledge in Best Value Structure/Process. Associated Schools of Construction. |